To watch the Ellison C. Pierce, Jr., MD, Memorial Safety Lecture in its entirety, please visit: https://www.apsf.org/asa-apsf-ellison-c-pierce-jr-md-memorial-lecturers/

This piece summarizes the content of the 2020 Ellison C. Pierce, Jr., MD, Memorial Patient Safety Lecture, which was presented on October 3, 2020, at the American Society of Anesthesiologists Annual Meeting (held virtually). It highlights the conflict between quality and safety, and is a call to action for anesthesia professionals to recognize their important role in improving health care safety. My presentation first reviews the emerging role of the anesthesia professional in today’s health care safety environment. I then explore an important yet under-appreciated consequence of unsafe work environments—the impact of dysfunctional systems on clinicians. Next, I talk about the push for greater health care quality in the U.S., followed by a summary of conflicting approaches to safety and quality efforts. Finally, I review principles in human factors engineering and human-centered design which may contribute to the solution of this dilemma.

Patient Safety

Based on my review of the literature, it appears that about one-half of perioperative deaths are preventable. While mortality primarily attributable to anesthesia is quite low, surgical mortality is at least 100-fold higher. Thus, anesthesia professionals can and must play a greater role in reducing not just anesthesia-related but also surgery-related morbidity and mortality. Toward this goal, for example, we need to take more responsibility for reducing surgical infections through correct and timely prophylactic antibiotics, as well as better sterile technique by anesthesia professionals when administering intravenous drugs, especially during induction.1 Maintaining effective mean blood pressure throughout perioperative care is another way anesthesia professionals can improve surgical outcomes.

More than two decades ago, I introduced to health care the concept of a “non-routine event” (NRE). We define an NRE as anything that is undesirable, unusual, or surprising for a particular patient in their specific clinical situation. My colleagues and I have demonstrated that NREs: 1) are common, with an incidence ranging from 20% to 40% in various perioperative settings; 2) are multifactorial; 3) can contribute to and/or be associated with adverse patient outcomes; and, importantly, 4) provide addressable evidence for improving defective processes and technology within care systems.2

A key problem with the current view of patient safety (Safety 1.0) in most health care organizations is its focus on detecting and analyzing adverse events or aggregate poor outcomes to drive mitigation or improvement. While useful, the effectiveness of such an approach is limited due to hindsight bias and its inability to provide sufficient insight as to how best to prevent future adverse events. So, instead, in health care as in other industries, patient safety professionals need to study how experienced clinicians are successful despite the dysfunctional processes and systems in which they must work and then design processes and technology to support and foster these resilient behaviors (Safety 2.0).

Clinician Safety

Degraded clinician well-being and burnout are more likely when a hospital unduly emphasizes production, has dysfunctional processes and technology that predispose to unsafe care, or has a culture and leaders that inadequately understand and support front-line clinicians’ needs. Studies show that clinician burnout is associated with adverse effects not only on the clinicians but also on patient safety and organizational performance.3 Further, many of the system factors associated with an increased risk of burnout are the same factors associated with the risk of NREs and preventable adverse events.

Safety vs. Quality

Data suggest that health care in the United States, when compared with other developed countries, is generally of lower quality, is often less safe, and has a higher proportion of total expenditures going toward activities that don’t directly benefit patients (see numerous articles and figures at www.commonwealthfund.org). Thus, there is tremendous pressure to increase value in health care, here defined as the quality of the care delivered divided by the cost of providing that care. Because cost is the denominator in the value equation, the effectiveness and efficiency of care have become a predominant focus in most organizations’ quality initiatives.
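Written out, the value definition above takes the following form. The symbols $V$, $Q$, and $C$ are labels introduced here for illustration only; they are not notation from the lecture.

$$
V = \frac{Q}{C}
$$

Here $V$ is value, $Q$ is the quality of the care delivered, and $C$ is the cost of providing that care. With $Q$ held fixed, any reduction in $C$ raises $V$, which is one way to see why efficiency tends to dominate value-driven initiatives.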

Table 1 shows the contrasts between an organization that is primarily production- or value-focused versus one where safety and reliability are the predominant focus. I modified this table from the work of Landau and Chisholm.4 To summarize just a few of the contrasts, a production focus emphasizes optimization, wanting to have just the right amount of personnel, tools, and supplies available just when they are needed. In contrast, safety or high reliability organizations want built-in redundancy and have a “just in case” mentality. The production organization treats adverse events as anomalies while the safety organization views adverse events as valuable information about potential system dysfunctions. The production organization tends to have a “shame and blame” culture, whereas, in the safety organization, those who report errors or issues are praised and even rewarded. Thus, with a production orientation, the system is more error prone while in a safety organization, it is error tolerant, and more importantly, will be resistant to serious accidents through better error detection and recovery.

Table 1: Production vs. Safety Focused Health Care Organizations.

| Production Focus | Safety/Reliability Focus |
| --- | --- |
| Optimization (Just in Time) | Redundancy (Just in Case) |
| Promote standardization | Accept diversity/variability |
| Resistant to change | Adaptive and flexible |
| Adverse events as anomalies | Adverse events as information |
| Optimism about outcomes | Pessimism about outcomes |
| Shoot the messenger | Reward the messenger |
| Error prone | Error tolerant |

Conflict between production and safety often occurs in procedural areas, which are high cost (and potentially high revenue). Here, there is constant organizational pressure to improve productivity (i.e., throughput) and financial performance. Both of these can be easily measured, whereas safety can only be inferred: when accidents happen, we know we are in unsafe territory. However, when there have been no accidents, the organization can have a false sense of safety. There will thus be a tendency to “push the [safety] envelope” over time, increasing the risk of harm events. To date, the only perioperative “safety meter” we have is individual clinicians: your willingness to speak up, to stop the line, and to advocate for patient and clinician safety.

Design for Safety

Human factors engineering (HFE) provides methods to design processes, technology, and systems to achieve higher levels of safety and quality. HFE is the scientific and practical discipline of understanding and improving systems to increase overall safety, effectiveness, efficiency, and user satisfaction.5

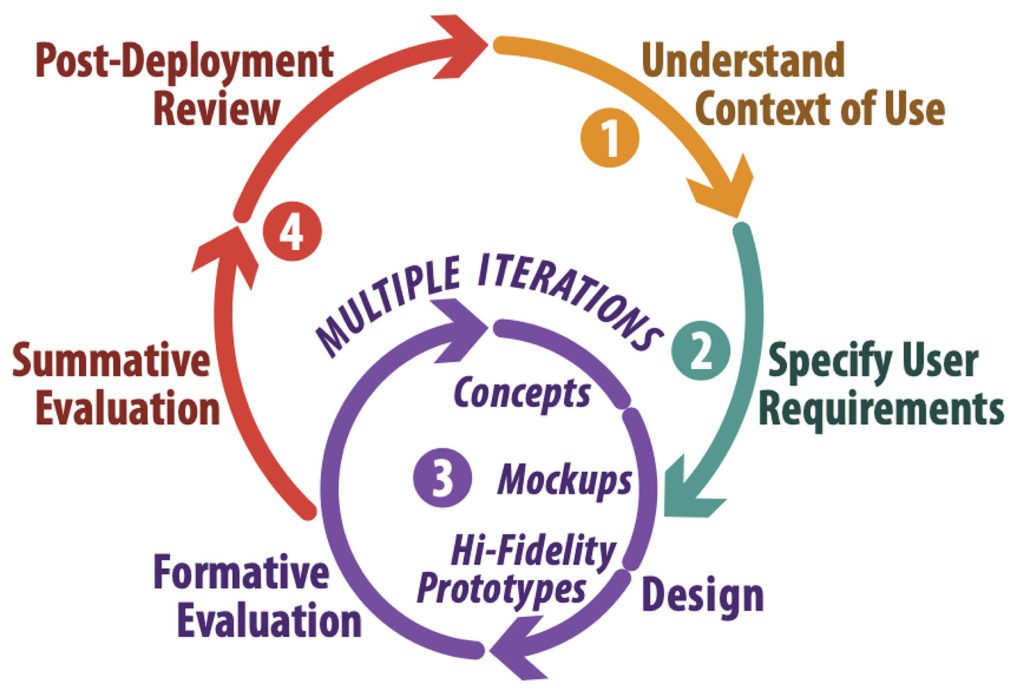

The Human- (or User-) Centered Design Cycle (Figure 1) is how human factors engineers (HFEs) design, evaluate, and deploy any new or revised tool, technology, process, or system.

The cycle starts with gaining a full understanding of the problem you’re trying to address. This user research leads to a full description of users’ needs. Next, you specify the use-related design requirements. Working through multiple iterations of design and evaluation yields an optimized product or intervention that meets the desired requirements. You then assess, prior to full implementation, whether the resulting product or intervention actually meets your users’ needs. In the presentation, I provided examples of each phase of the human-centered cycle based on our prior research (see, for example, references 6–9).

POPTEC (PeOple, Processes, Technology, Environment, and Culture) is how human factors engineers think about the performance-shaping factors that affect the risk of non-routine and adverse events. POPTEC thus provides a framework not only for user research but also to guide design and evaluation throughout the entire human-centered design (HCD) cycle.5

Conclusion

To improve patient safety, the health care organization needs to create resilient human-centered systems. Individuals and teams should be trained in serious event management. Standardization is important for both quality and safety. But, especially for safety, it must be flexible and open to continual refinement. Processes and technology need to be engineered to be safety-oriented, error-tolerant, and facilitate error recovery. A robust event-reporting system will encourage reporting and provide feedback. All meaningful events need to be analyzed to identify the most important safety problems. Then, potential interventions should be developed and evaluated using HFE principles. This difficult work must be multidisciplinary and collaborative and should fully engage all relevant clinicians and other stakeholders. Finally, an organization needs enlightened leaders who actually understand and prioritize patient and worker safety, and who put real effort into creating a robust safety culture.

In closing, anesthesia professionals must not simply be “the people who put people to sleep.” We have the training, knowledge, and skills that make us uniquely suited to be the safety leaders in our organizations. To have an impact, we need to take a broader view of our role in health care to fully realize our potential to improve both safety and quality.

Matthew B. Weinger, MD, MS, is the Norman Ty Smith Chair in Patient Safety and Medical Simulation and professor of anesthesiology, biomedical informatics and medical education at the Vanderbilt University School of Medicine, Nashville, TN. He also is the director, Center for Research and Innovation in Systems Safety, Vanderbilt University Medical Center, Nashville, TN.

Dr. Weinger is a founding shareholder and paid consultant of Ivenix Corp., a new infusion pump manufacturer. He also received an investigator-initiated grant from Merck to Vanderbilt University Medical Center to study clinical decision making.

References

1. Loftus RW, Koff MD, Birnbach DJ. The dynamics and implications of bacterial transmission events arising from the anesthesia work area. Anesth Analg. 2015;120:853–860.

2. Liberman JS, Slagle JM, Whitney G, et al. Incidence and classification of nonroutine events during anesthesia care. Anesthesiology. 2020;133:41–52.

3. Committee on Systems Approaches to Improve Patient Care by Supporting Clinician Well-Being, National Academies of Sciences, Engineering, and Medicine. Taking action against clinician burnout: a systems approach to professional well-being. Washington, DC: The National Academies Press, October 2019, 334 pp. doi.org/10.17226/25521. ISBN: 978-0-309-49547-9.

4. Landau M, Chisholm D. The arrogance of optimism: notes on failure-avoidance management. J Contingencies Crisis Manage. 1995;3:67.

5. Weinger MB, Wiklund M, Gardner-Bonneau D (editors). Handbook of human factors in medical device design. Boca Raton, FL: CRC Press, 2011.

6. Anders S, Miller A, Joseph P, et al. Blood product positive patient identification: comparative simulation-based usability test of two commercial products. Transfusion. 2011;51:2311–2318.

7. Anders S, Albert R, Miller A, et al. Evaluation of an integrated graphical display to promote acute change detection in ICU patients. Int J Med Inform. 2012;81:842–51.

8. Weinger M, Slagle J, Kuntz A, et al. A multimodal intervention improves post-anesthesia care unit handovers. Anesth Analg. 2015;121:957–971.

9. Weinger MB, Banerjee A, Burden A, et al. Simulation-based assessment of the management of critical events by board-certified anesthesiologists. Anesthesiology. 2017;127:475–89.
